No Virtualization Tax for MLPerf Inference v3.0 Using NVIDIA Hopper and Ampere vGPUs and NVIDIA AI Software with vSphere 8.0.1 - VROOM! Performance Blog

By a mysterious writer

Description

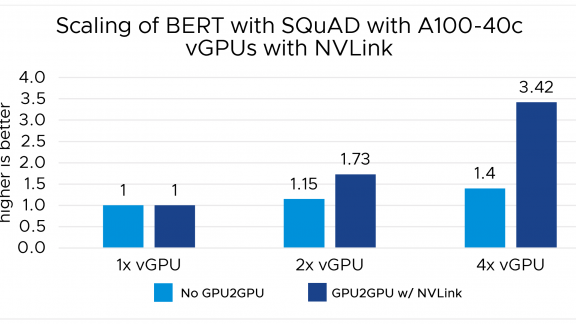

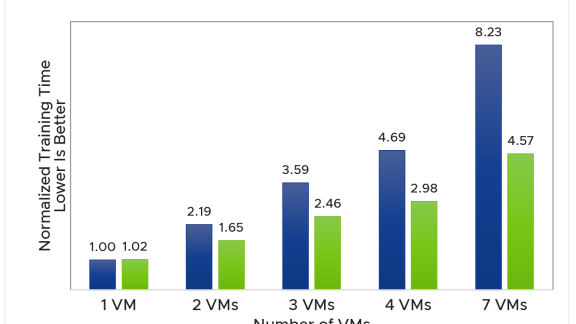

In this blog post, we present MLPerf Inference v3.0 test results for the VMware vSphere virtualization platform with NVIDIA H100- and A100-based vGPUs. Our tests show that workloads using NVIDIA vGPUs in vSphere perform the same as or better than they do on a bare-metal system.

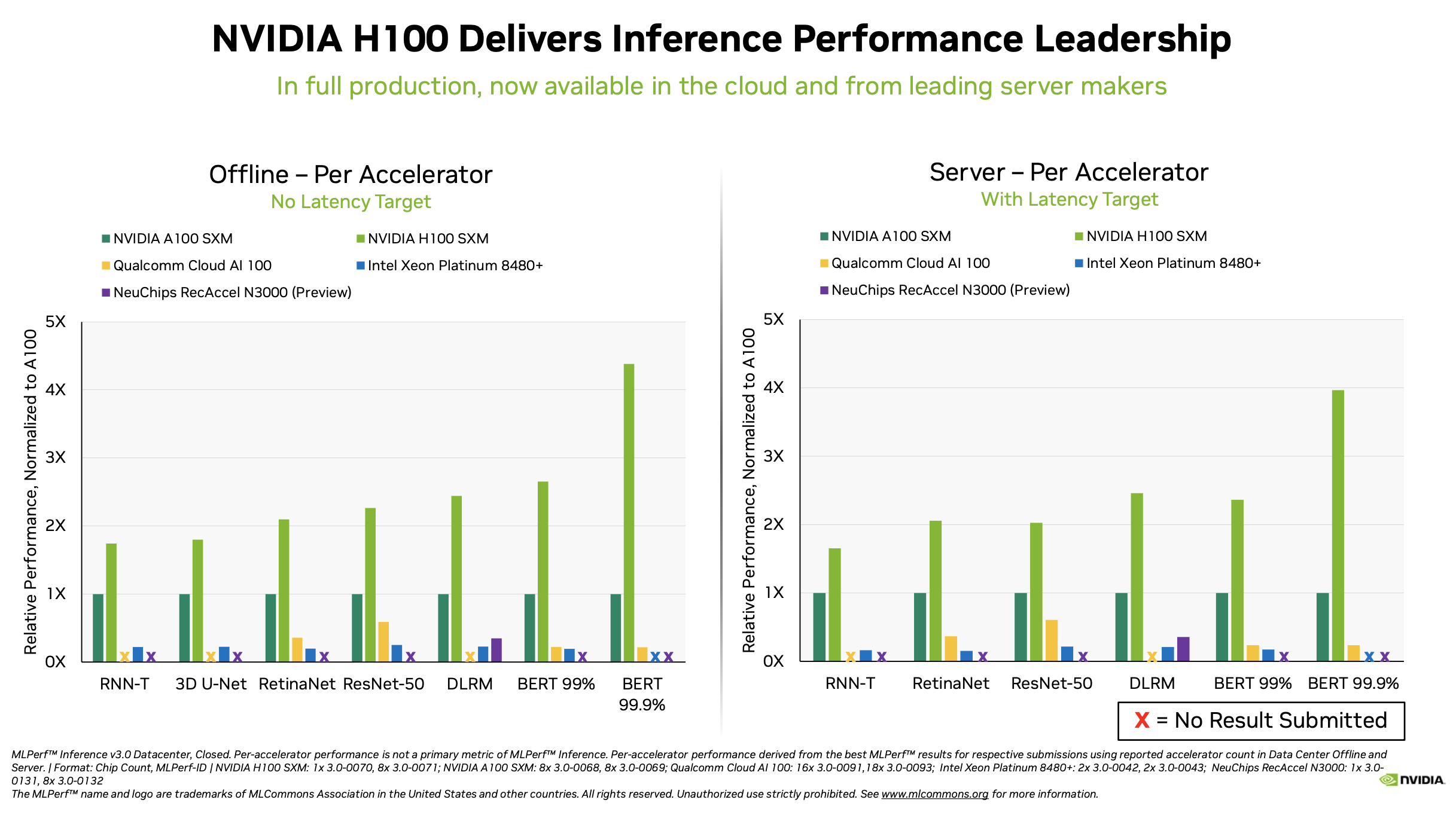

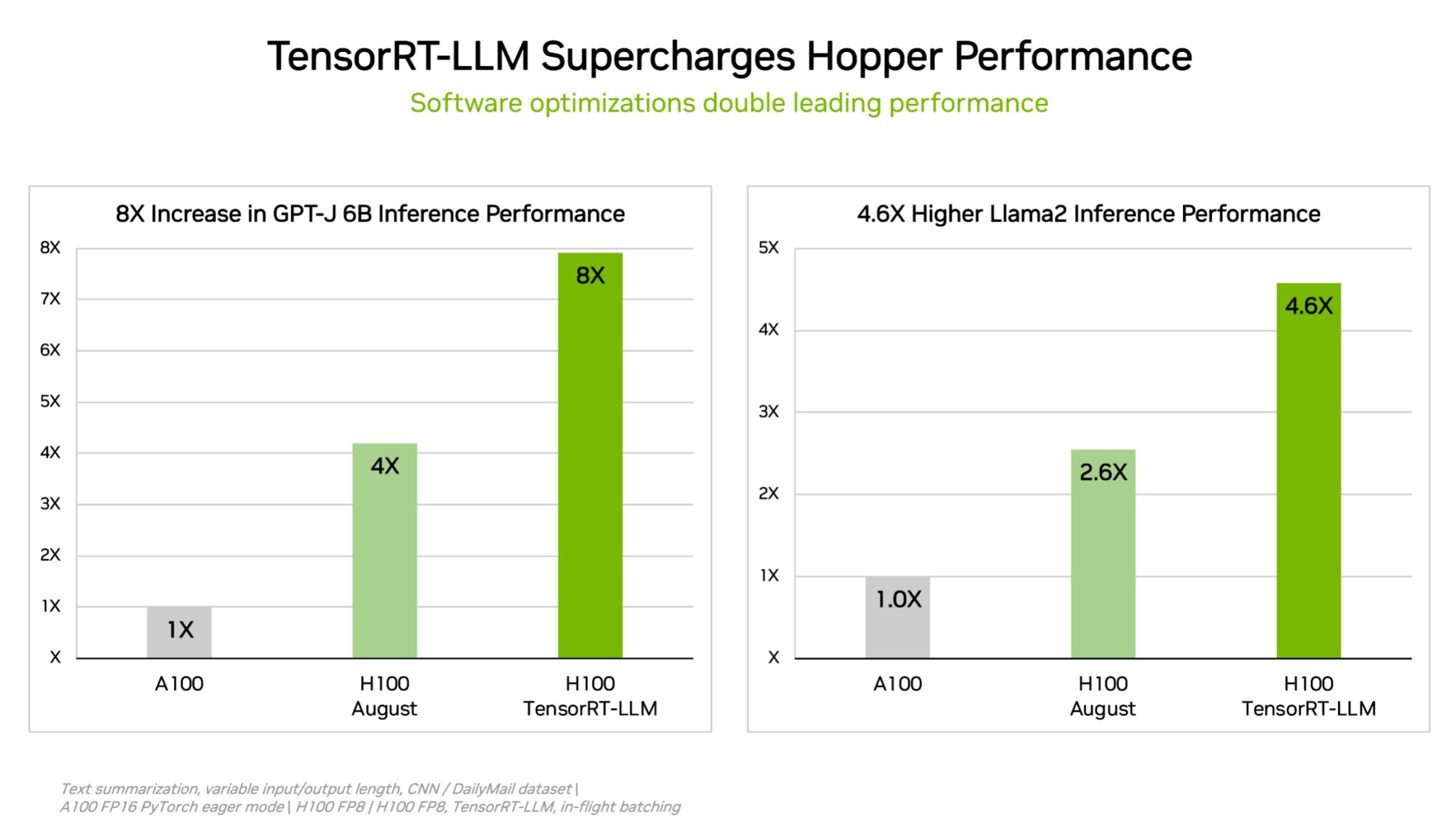

NVIDIA Hopper AI Inference Benchmarks in MLPerf Debut Sets World Record

Getting the Best Performance on MLPerf Inference 2.0

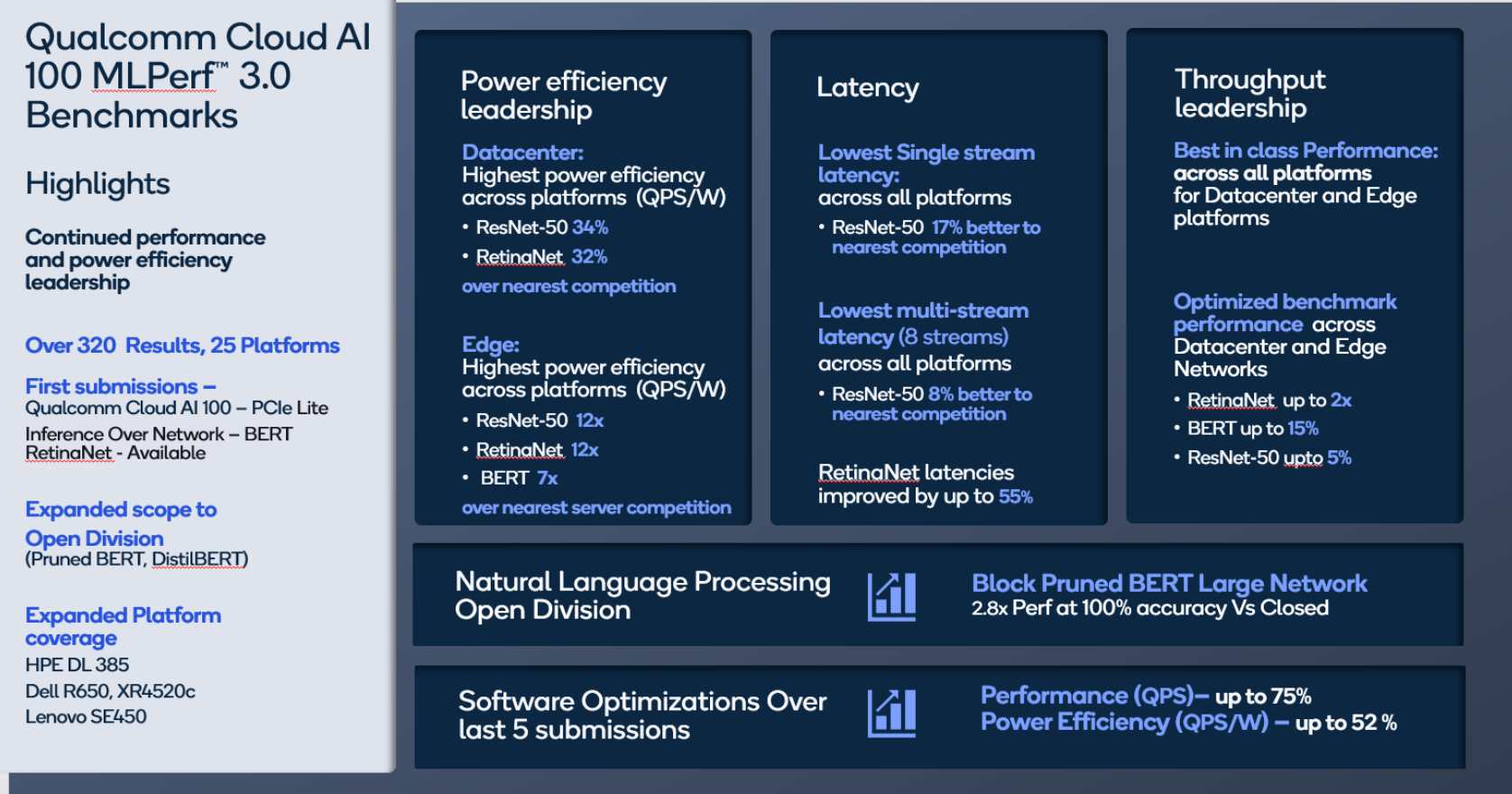

MLPerf Inference 3.0 Highlights - Nvidia, Intel, Qualcomm and…ChatGPT

NVIDIA Posts Big AI Numbers In MLPerf Inference v3.1 Benchmarks With Hopper H100, GH200 Superchips & L4 GPUs

Setting New Records in MLPerf Inference v3.0 with Full-Stack Optimizations for AI

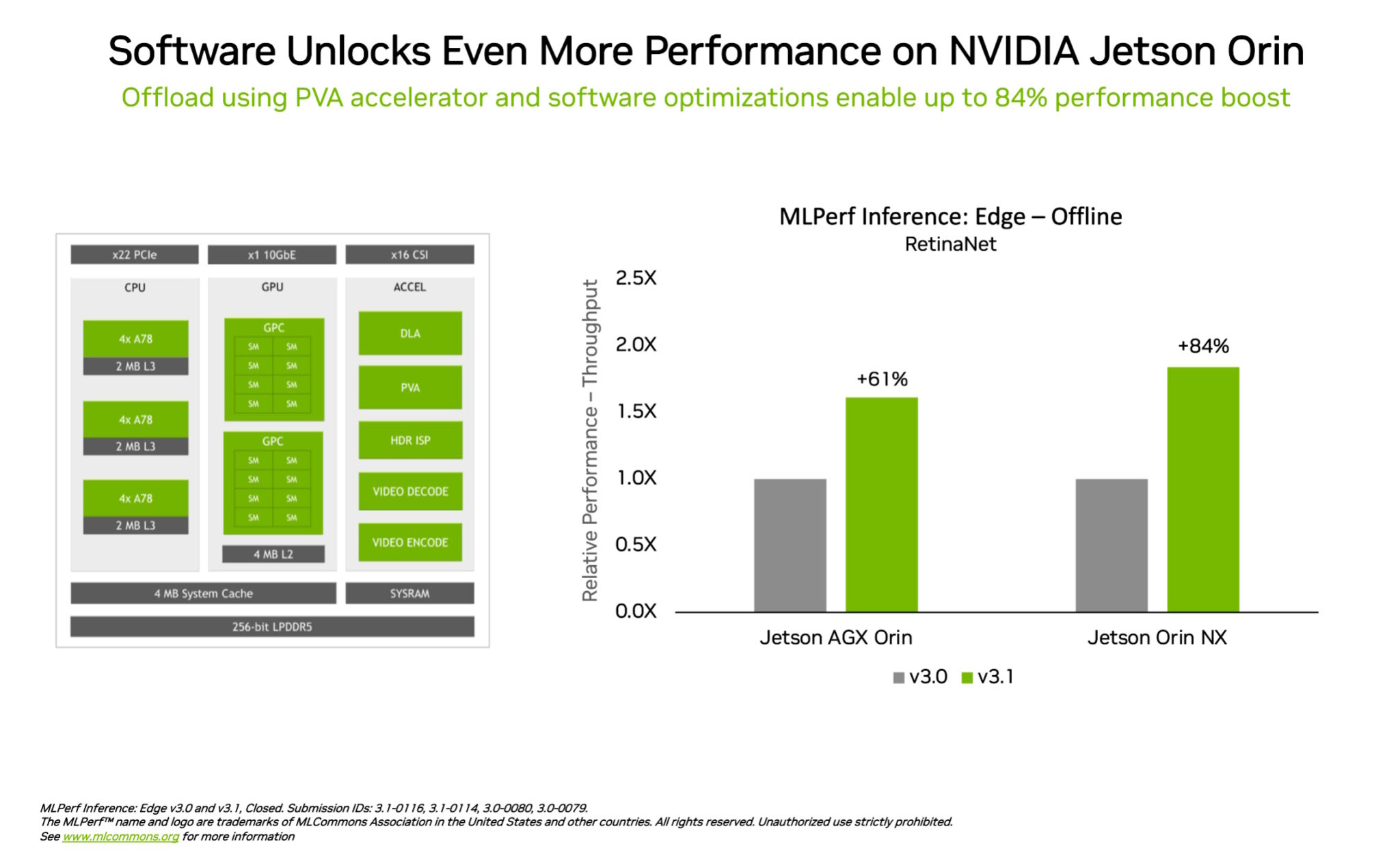

Leading MLPerf Inference v3.1 Results with NVIDIA GH200 Grace Hopper Superchip Debut

GPU – VROOM! Performance Blog

NVIDIA Grace Hopper Superchip Sweeps MLPerf Inference Benchmarks

VMware and NVIDIA solutions deliver high performance in machine learning workloads - VROOM! Performance Blog
