Six Dimensions of Operational Adequacy in AGI Projects — LessWrong

By a mysterious writer

Description

Editor's note: The following is a lightly edited copy of a document written by Eliezer Yudkowsky in November 2017. Since this is a snapshot of Eliez…

The Wizard of Oz Problem: How incentives and narratives can skew

OpenAI, DeepMind, Anthropic, etc. should shut down. — LessWrong

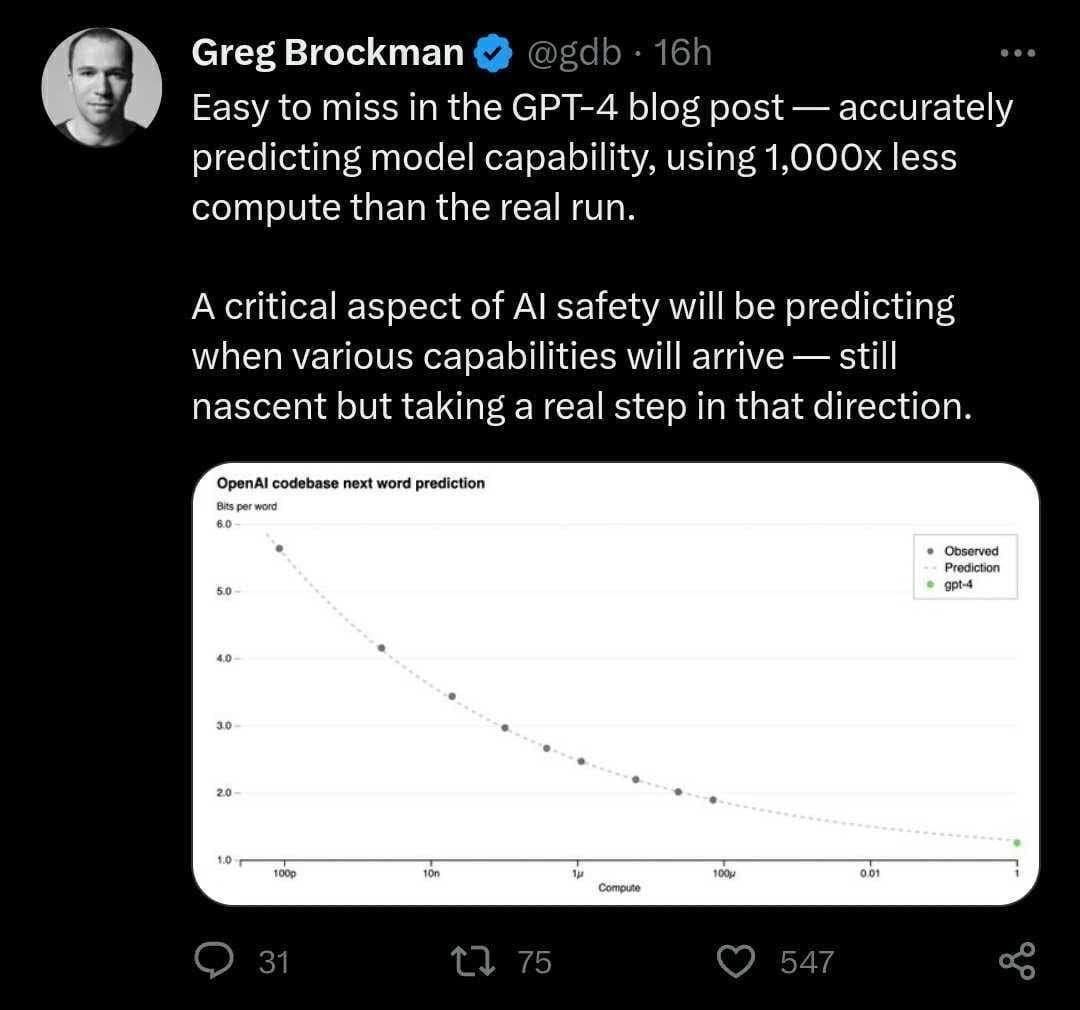

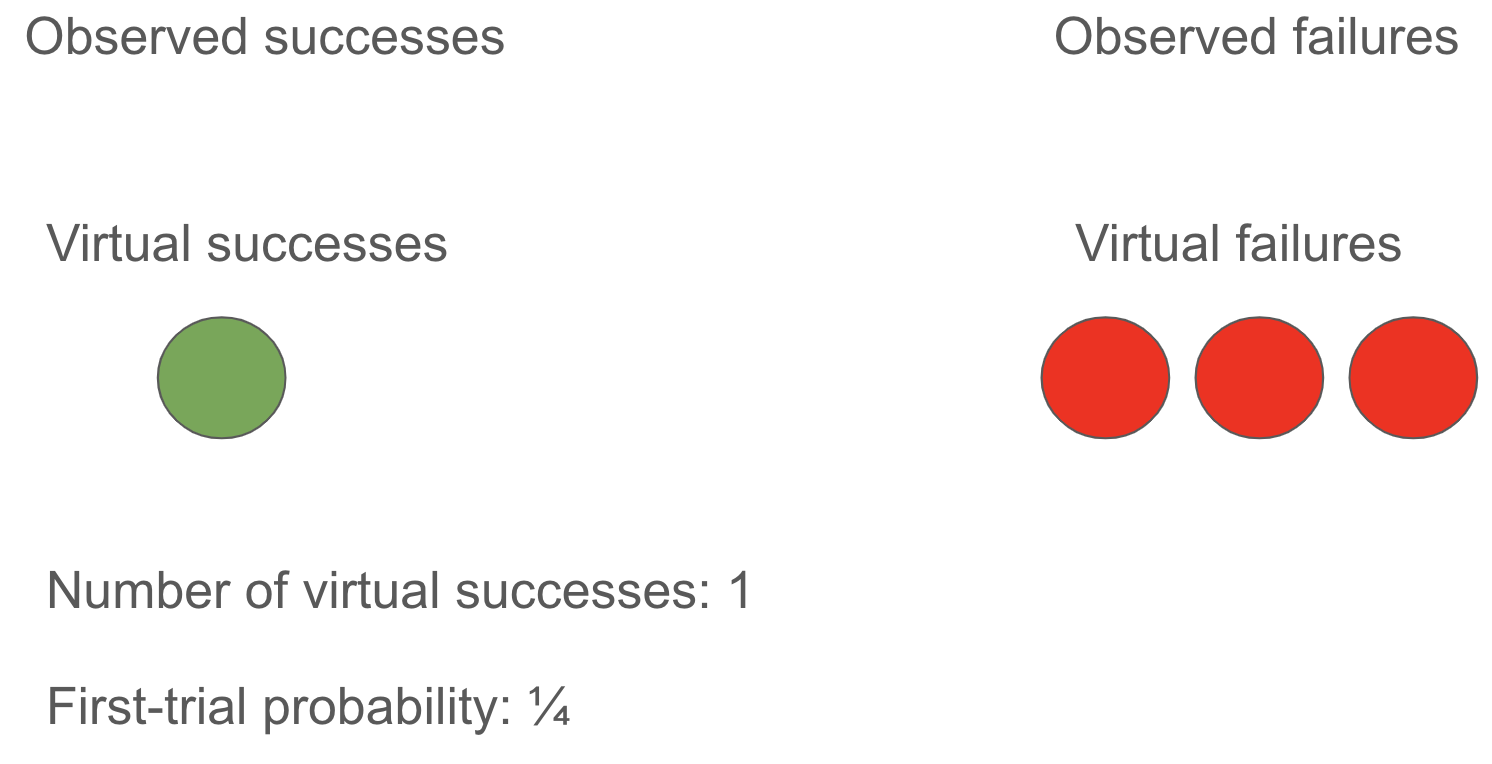

Semi-informative priors over AI timelines

25 of Eliezer Yudkowsky Podcasts Interviews

(PDF) Advanced AI Governance: A Literature Review of Problems

Common misconceptions about OpenAI — AI Alignment Forum

Embedded World-Models - Machine Intelligence Research Institute

OpenAI, DeepMind, Anthropic, etc. should shut down. — EA Forum

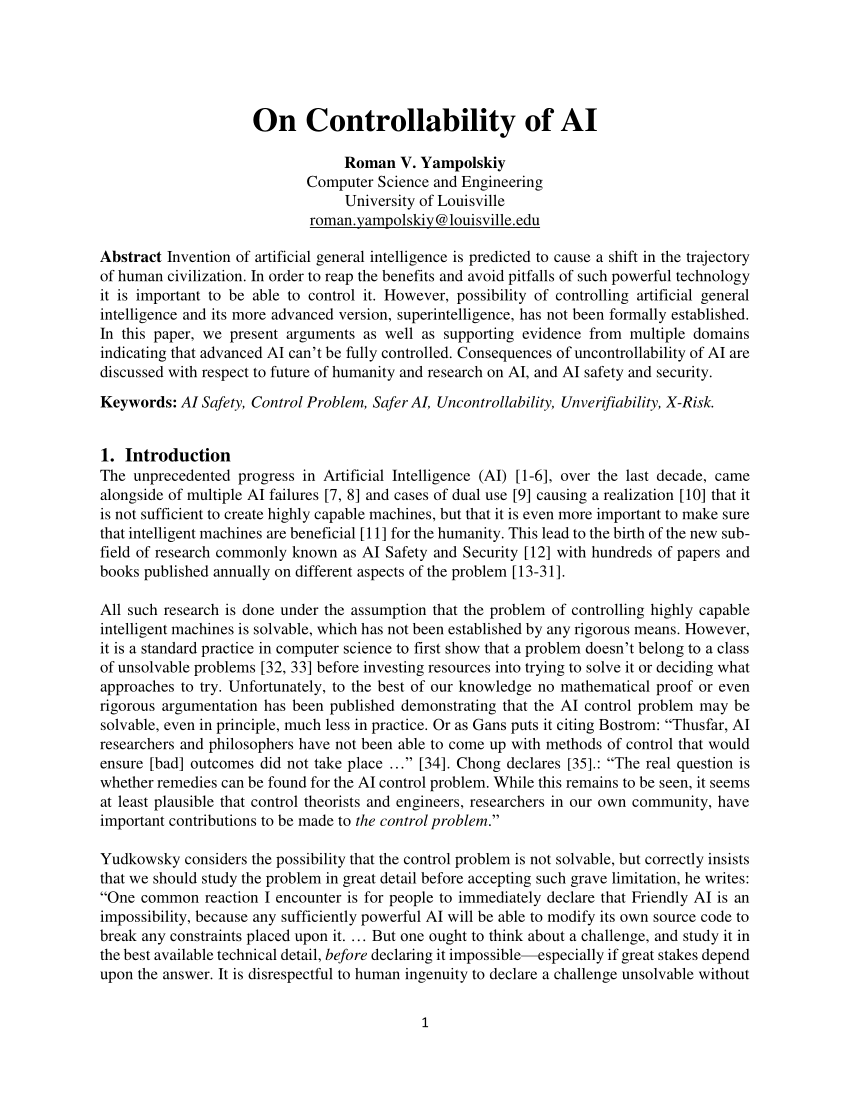

(PDF) On Controllability of AI

(PDF) Convergence in Mississippi: A Spatial Approach

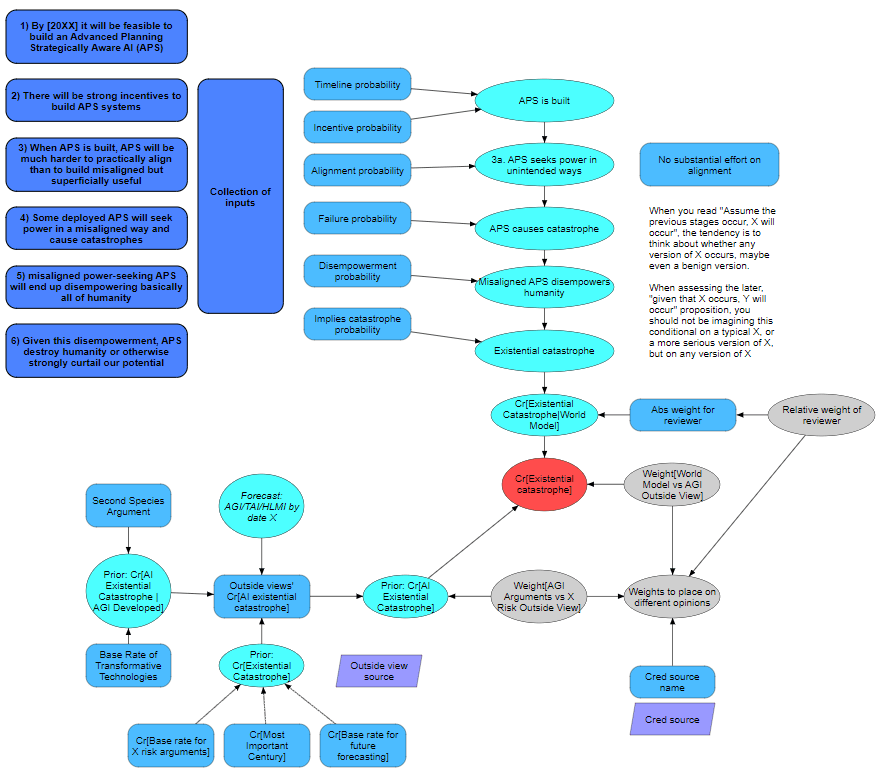

AGI ruin scenarios are likely (and disjunctive) — LessWrong

rationality-ai-zombies/becoming_stronger.tex at master_lite

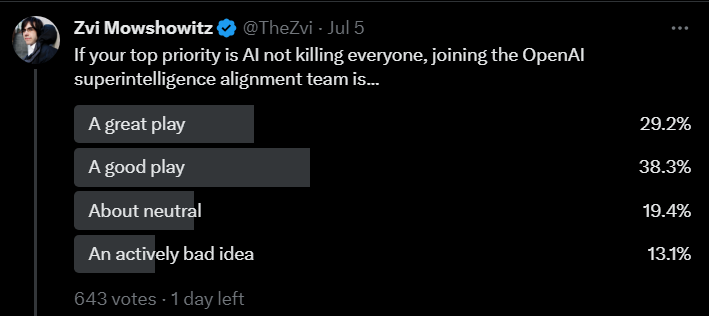

[Linkpost] Introducing Superalignment — LessWrong
